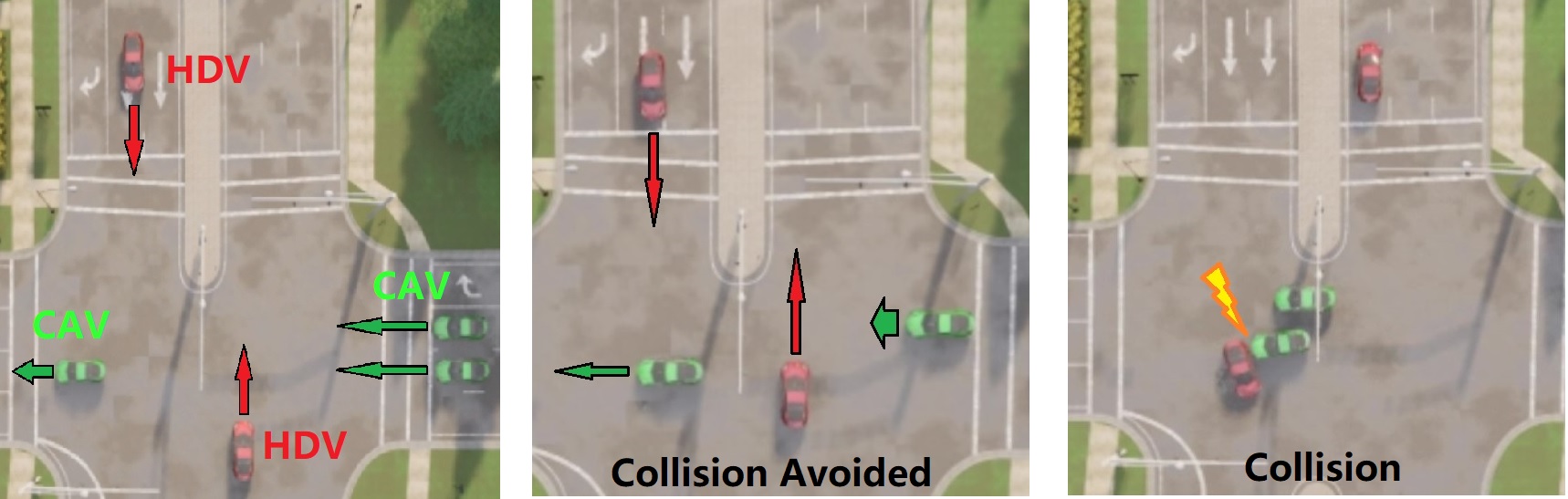

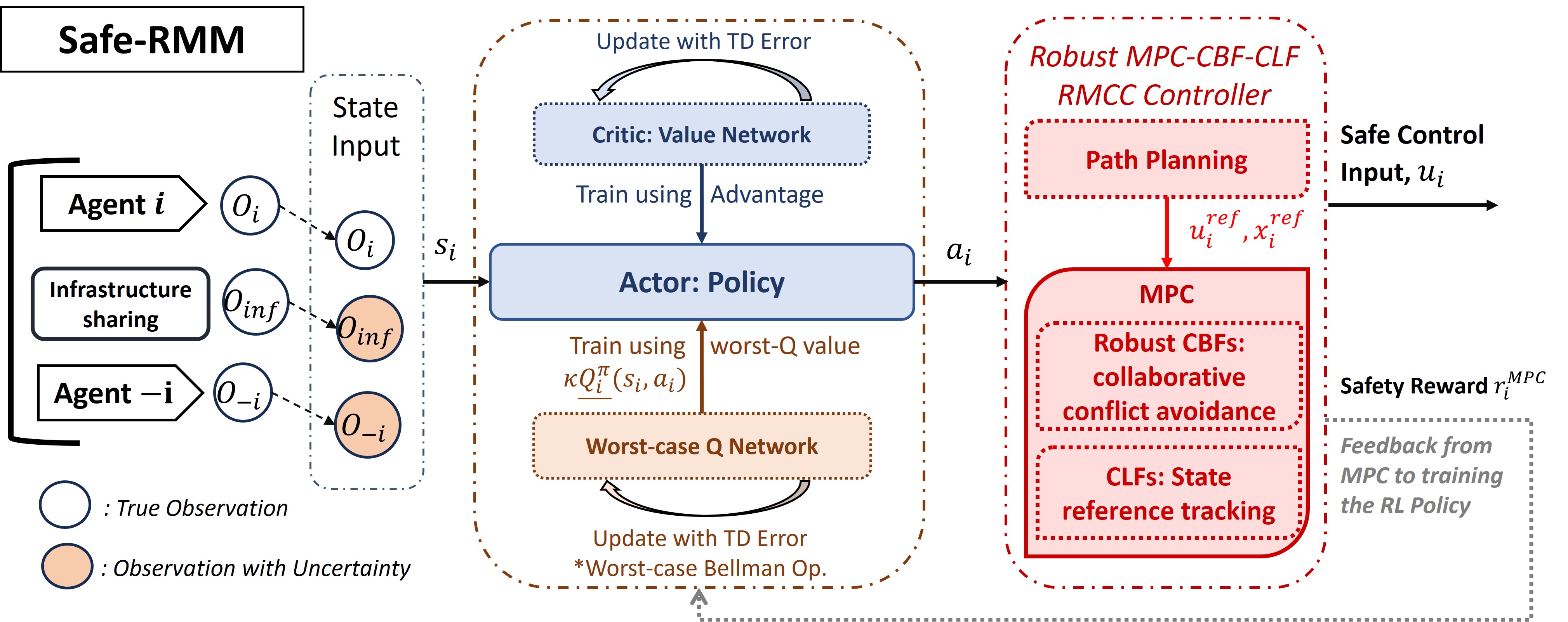

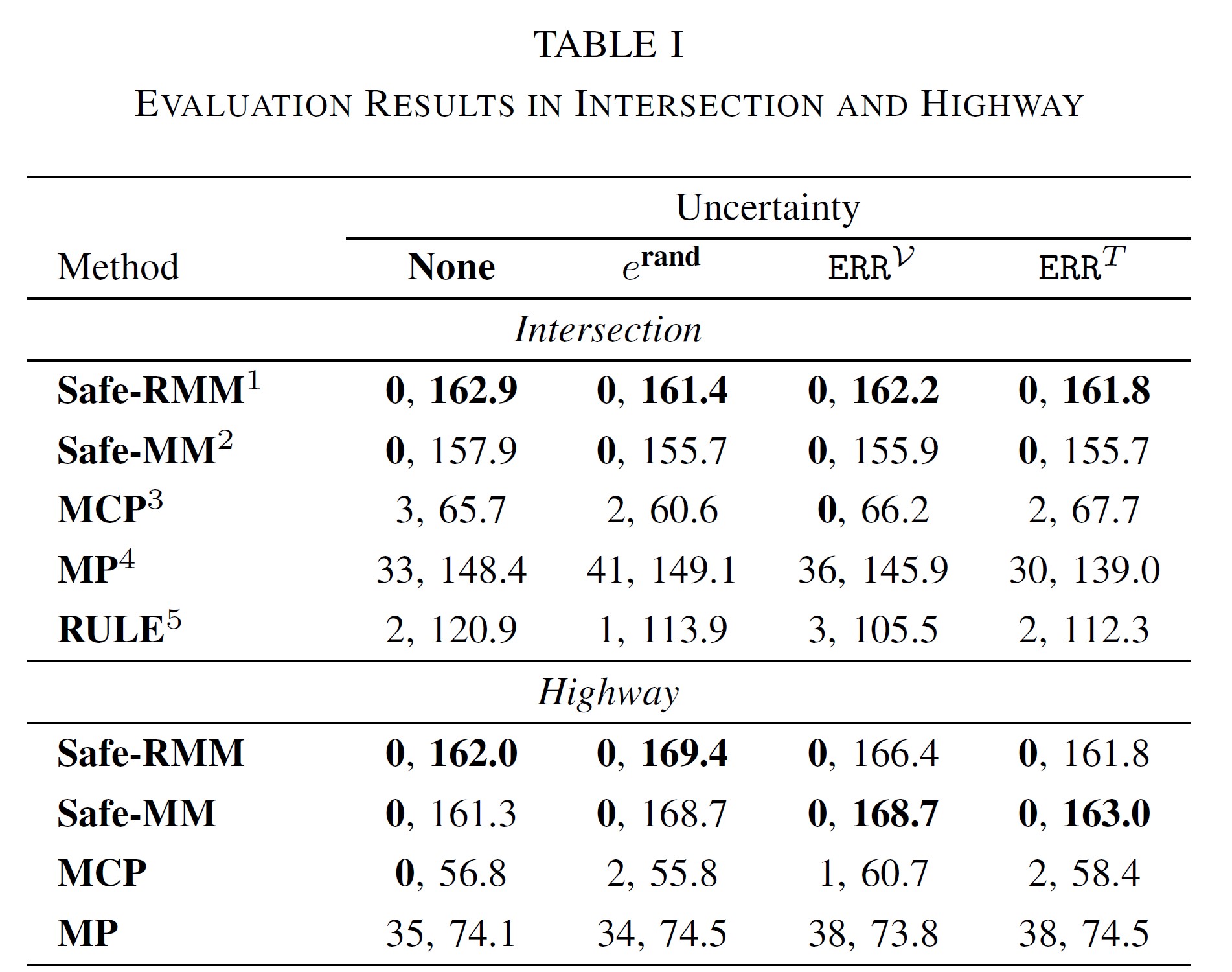

We address the problem of coordination and control of Connected and Automated Vehicles (CAVs) under imperfect observations in mixed traffic environments. A commonly used approach is learning-based decision-making, such as reinforcement learning (RL). However, most existing safe RL methods suffer from two limitations: (i) they assume accurate state information, and (ii) they generally define safety over the expectation of the trajectories. It remains challenging to design optimal coordination among multiple agents while enforcing hard safety constraints at every time step under system state uncertainties (e.g., those that arise from noisy sensor measurements, communication, or state estimation methods). To address this challenge, we propose a safety-guaranteed hierarchical coordination and control scheme called Safe-RMM. Specifically, the high-level coordination policy of CAVs in mixed traffic environments is trained with the Robust Multi-Agent Proximal Policy Optimization (RMAPPO) method. Although the policy is trained without uncertainty, our method leverages a worst-case Q network to keep the model's performance robust when state uncertainties are present during testing. The low-level controller is implemented using model predictive control (MPC) with robust Control Barrier Functions (CBFs), which guarantee safety through their forward invariance property. We compare our method with baselines on different road networks in the CARLA simulator. Results show that our method achieves the best safety and efficiency among the evaluated methods in challenging mixed traffic environments with uncertainties.
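To make the robust CBF idea concrete, the following is a minimal sketch, not the Safe-RMM controller itself. It replaces the MPC with a closed-form one-step safety filter for a single car-following scenario. Everything specific here is an assumption made for illustration: the barrier `h = gap - d_min - tau * v_ego`, the Euler dynamics `gap_next = gap + (v_lead - v_ego) * dt` and `v_next = v_ego + u * dt`, the measurement error bounds `eps_gap` and `eps_v`, the assumption that the ego speed is known exactly, and all parameter values.

```python
def robust_accel_bound(gap_meas, v_ego, v_lead_meas, *, eps_gap=0.0, eps_v=0.0,
                       d_min=5.0, tau=1.0, gamma=0.5, dt=0.1):
    """Largest ego acceleration that satisfies the discrete-time CBF condition
    h(x_next) >= (1 - gamma) * h(x) for every true state consistent with the
    measurements, where h = gap - d_min - tau * v_ego (hypothetical barrier)."""
    # The bound grows with both gap and lead speed, so the worst case is the
    # smallest gap and slowest lead vehicle allowed by the error bounds.
    gap_worst = gap_meas - eps_gap
    v_lead_worst = v_lead_meas - eps_v
    h_worst = gap_worst - d_min - tau * v_ego
    # Solving the CBF inequality for u under the assumed Euler dynamics gives
    # u <= (gamma * h + (v_lead - v_ego) * dt) / (tau * dt).
    return (gamma * h_worst + (v_lead_worst - v_ego) * dt) / (tau * dt)


def safe_accel(u_nominal, gap_meas, v_ego, v_lead_meas, *,
               u_min=-6.0, u_max=3.0, **cbf_params):
    """Clip a nominal acceleration to the robust CBF bound and actuator limits.
    If the bound lies below u_min the condition is infeasible; this sketch
    then simply brakes as hard as the actuator allows."""
    bound = robust_accel_bound(gap_meas, v_ego, v_lead_meas, **cbf_params)
    return max(u_min, min(u_nominal, bound, u_max))


if __name__ == "__main__":
    # Same measurements, without and with bounded measurement error.
    print(safe_accel(1.5, 21.0, 15.0, 12.0))
    print(safe_accel(1.5, 21.0, 15.0, 12.0, eps_gap=0.5, eps_v=0.5))
```

With the error bounds set to zero, the nominal acceleration passes through unchanged in this example. With nonzero bounds, the filter becomes more conservative and commands braking, because it must satisfy the barrier condition for the least favorable state the measurements allow.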

The figure shows the decision pipeline of a single agent; all other agents follow the same procedure. During training, both the value network and the worst-case Q network contribute to the update of the actor's policy. During testing, agent \( i \) feeds its uncertain state observations to its actor and samples the high-level action \( a_i \), which is then passed to the robust MPC controller for path planning and generation of the safe control \( u_i \).
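The test-time flow in the caption can be sketched as follows. This is an illustrative skeleton under stated assumptions, not the released implementation. The action set `ACTIONS`, the two-element state `(gap, ego_speed)`, the uniform bounded observation noise, and the functions `toy_actor` and `toy_controller` are all hypothetical stand-ins for the trained actor network and the robust MPC controller.

```python
import math
import random

# Hypothetical discrete high-level action set.
ACTIONS = ("keep_lane", "change_left", "change_right")


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def agent_step(true_state, actor, controller, noise_bound, rng):
    """One decision step of agent i: noisy observation -> a_i -> u_i."""
    # The agent only sees the state corrupted by bounded noise.
    obs = [x + rng.uniform(-noise_bound, noise_bound) for x in true_state]
    # High level: sample a_i from the actor's categorical policy.
    probs = softmax(actor(obs))
    a_i = rng.choices(ACTIONS, weights=probs, k=1)[0]
    # Low level: turn a_i into a control u_i using the same noisy observation.
    u_i = controller(a_i, obs, noise_bound)
    return a_i, u_i


def toy_actor(obs):
    """Stand-in for the trained actor: prefers lane changes when the gap is small."""
    gap, _ego_speed = obs
    return [gap / 10.0, 1.0, 1.0]


def toy_controller(a_i, obs, noise_bound):
    """Stand-in for the robust MPC: brakes unless the worst-case gap exceeds
    a two-second headway; steering offsets and their signs are placeholders."""
    gap, ego_speed = obs
    worst_gap = gap - noise_bound
    accel = 1.0 if worst_gap > 2.0 * ego_speed else -2.0
    steer = {"keep_lane": 0.0, "change_left": -0.1, "change_right": 0.1}[a_i]
    return accel, steer


if __name__ == "__main__":
    rng = random.Random(0)
    print(agent_step([10.0, 10.0], toy_actor, toy_controller, 0.5, rng))
```

Passing the actor and controller in as callables keeps the two levels decoupled, which mirrors the hierarchical split described in the caption: the learned policy only chooses what to do, and the controller decides how to do it safely.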

@article{zhang2023safety,
  title={Safety Guaranteed Robust Multi-Agent Reinforcement Learning with Hierarchical Control for Connected and Automated Vehicles},
  author={Zhang, Zhili and Ahmad, HM and Sabouni, Ehsan and Sun, Yanchao and Huang, Furong and Li, Wenchao and Miao, Fei},
  journal={arXiv preprint arXiv:2309.11057},
  year={2024}
}